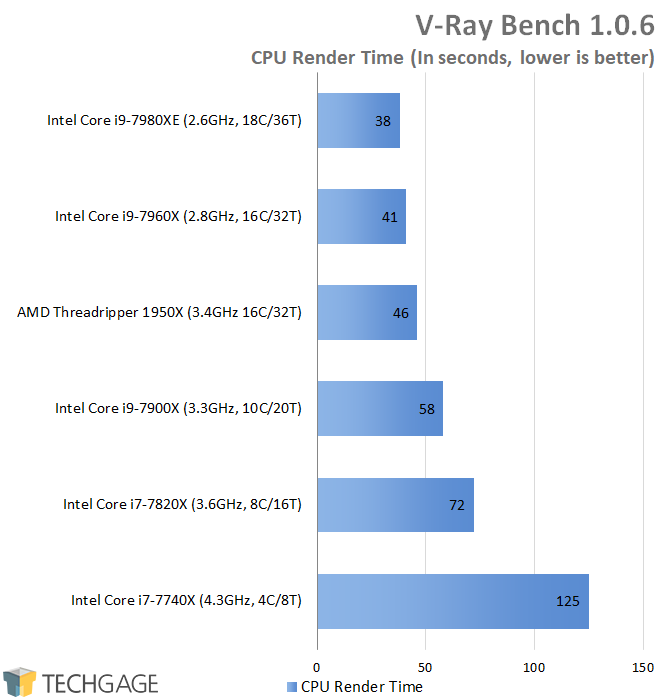

Now that a 32-core / 64-thread CPU is confirmed and due out in the next couple of months, is there still a justification for investing in GPU rendering? Especially given current GPU prices and availability, and the fact that GPUs still don't support all the features available on CPU and are usually limited by their onboard memory. I read that the 16-core 1950X is on par with a GTX 1080 Ti in rendering speed, so the 32-core Threadripper 2 should be about twice as fast as a GTX 1080 Ti, with none of the downsides of GPU rendering. Is my reasoning correct?
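To spell out the assumption behind that "about twice" estimate, here is a rough Python sketch. It takes the claim that a 16-core 1950X equals one GTX 1080 Ti as given and assumes render speed grows linearly with core count. The function name and the `scaling_efficiency` parameter are hypothetical, added only for illustration; nothing here is benchmark data.

```python
def estimated_speed_vs_gpu(cores, baseline_cores=16, scaling_efficiency=1.0):
    """Estimate CPU render speed relative to one GTX 1080 Ti.

    Assumption (from the post, not measured): a 16-core 1950X renders
    as fast as one GTX 1080 Ti, so 16 cores == 1.0.
    scaling_efficiency is a hypothetical knob: 1.0 means every extra
    core adds its full share, lower values mean diminishing returns.
    """
    # Fraction of extra cores beyond the 16-core baseline.
    extra = cores / baseline_cores - 1.0
    # The baseline stays at 1.0; only the extra cores are discounted.
    return 1.0 + extra * scaling_efficiency


# Perfect linear scaling: 32 cores come out at twice one GTX 1080 Ti.
print(estimated_speed_vs_gpu(32))  # 2.0

# With less-than-perfect scaling the advantage shrinks.
print(estimated_speed_vs_gpu(32, scaling_efficiency=0.8))
```

The "twice as fast" figure only holds if scaling really is linear; clock speeds, memory bandwidth, and the renderer itself can all pull the real number away from it.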

So with this trend, is there any reason to go the GPU route if one gets 2-3 of these 32-core CPUs? On top of that, rumor has it that AMD will release a 64-core / 128-thread CPU next year! That's insane if you ask me.

I guess the reason both RMan and Arnold are pushing so hard to match GPU and CPU results is that a lot of big VFX houses aren't going to use GPU farms for final frames yet. So it's quite important that assets look-deved on the GPU look very close to the CPU farm output, so there is less to worry about or troubleshoot downstream.
